Mobile Wallpapers

(1100+ Mobile Wallpapers)

Enhance your phone's appearance with our collection of stunning mobile wallpapers. Choose from a variety of themes and styles to personalize your device and stand out from the crowd.

![Solid Dark Grey Faded Wallpaper]()

Solid Dark Grey Faded Wallpaper

![Custom Made Samurai Mobile Wallpaper]()

Custom Made Samurai Mobile Wallpaper

![Solid Dark Grey Monochrome Background Wallpaper]()

Solid Dark Grey Monochrome Background Wallpaper

![Mobile Blue Cloudy Sky Wallpaper]()

Mobile Blue Cloudy Sky Wallpaper

![Screen Of Badly Broken Glass Wallpaper]()

Screen Of Badly Broken Glass Wallpaper

![Rainbow Flower iPhone Digital Art Wallpaper]()

Rainbow Flower iPhone Digital Art Wallpaper

![Cool Mom Best Mom Ever On Black Wallpaper]()

Cool Mom Best Mom Ever On Black Wallpaper

![Dog On Marshall Islands Wallpaper]()

Dog On Marshall Islands Wallpaper

![Colorful Aesthetic Dream Catcher Wallpaper]()

Colorful Aesthetic Dream Catcher Wallpaper

![Carlos Sainz Jr In A Motorcade Wallpaper]()

Carlos Sainz Jr In A Motorcade Wallpaper

![Dreamy Pink Sunset Clouds Wallpaper]()

Dreamy Pink Sunset Clouds Wallpaper

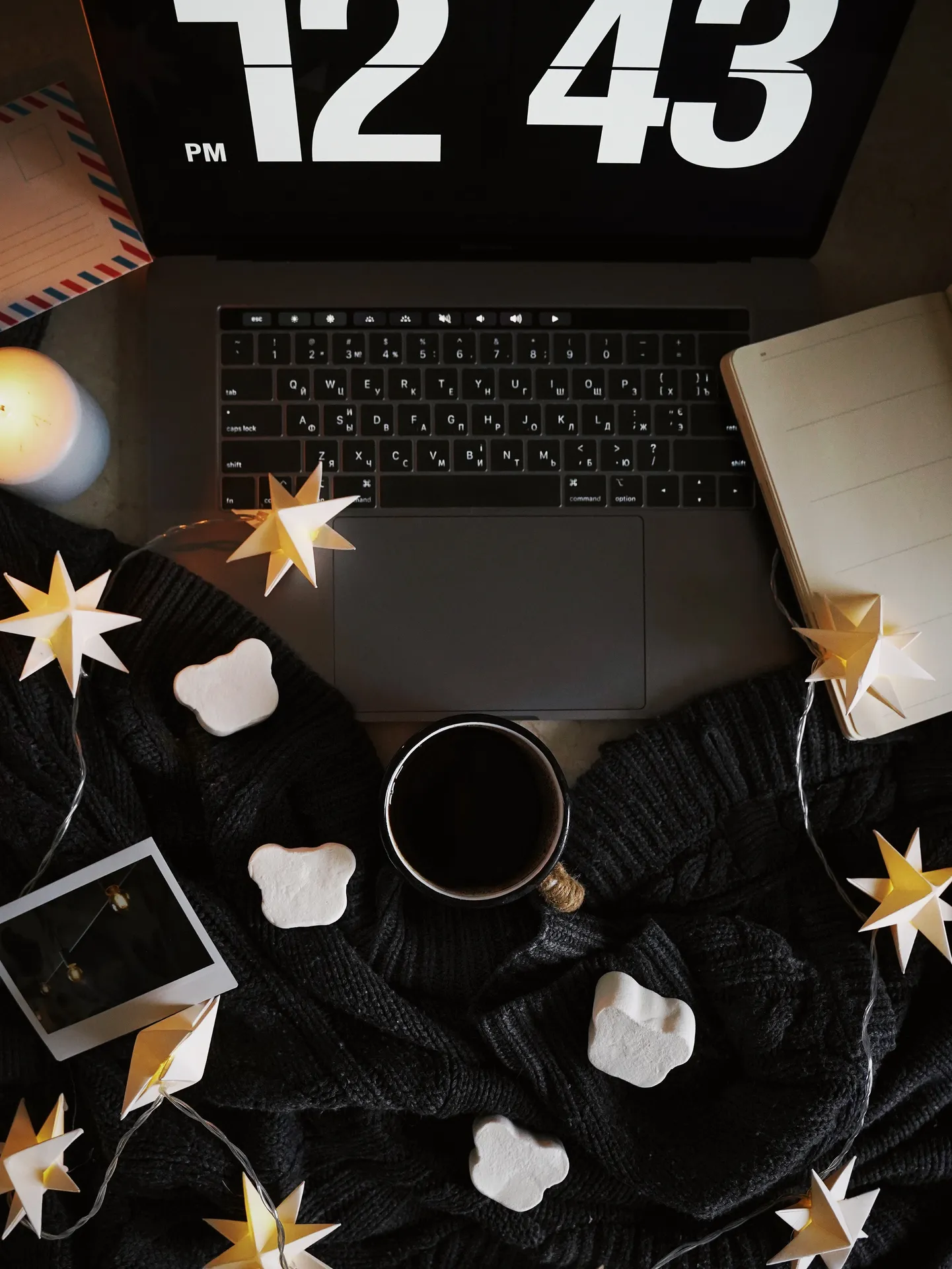

![New Year iPhone Stars Night Sky Wallpaper]()

New Year iPhone Stars Night Sky Wallpaper

![Azadi Tower Night Red Glow Wallpaper]()

Azadi Tower Night Red Glow Wallpaper

![Initial D Phone Logo And Car Wallpaper]()

Initial D Phone Logo And Car Wallpaper

![Corpse Bride Victor And Victoria Wallpaper]()

Corpse Bride Victor And Victoria Wallpaper

![A Green Dragon With A Sword In His Hand Wallpaper]()

A Green Dragon With A Sword In His Hand Wallpaper

![Hunk Model Male Face Wallpaper]()

Hunk Model Male Face Wallpaper

![Fluid Colors Apple Iphone X Wallpaper]()

Fluid Colors Apple Iphone X Wallpaper

![Apple Pay Later Hit Roadblock Wallpaper]()

Apple Pay Later Hit Roadblock Wallpaper

![Totally Indifferent Man Wallpaper]()

Totally Indifferent Man Wallpaper

![Cute Light Purple Planets Doodles Wallpaper]()

Cute Light Purple Planets Doodles Wallpaper

![Slaying A Dragon For Iphone Screens Wallpaper]()

Slaying A Dragon For Iphone Screens Wallpaper

![Three Ladies Silhouette Mobile Wallpaper]()

Three Ladies Silhouette Mobile Wallpaper

![Neon Cross Vaporwave Aesthetic Wallpaper]()

Neon Cross Vaporwave Aesthetic Wallpaper

![Apple Iphone X City Road River Skyscrapers Wallpaper]()

Apple Iphone X City Road River Skyscrapers Wallpaper

![Neon Quotes Pink Ice Cream Solves Everything Wallpaper]()

Neon Quotes Pink Ice Cream Solves Everything Wallpaper

![Basketball Aesthetic Neon Aesthetic Wallpaper]()

Basketball Aesthetic Neon Aesthetic Wallpaper

![Halloween Costume Skeleton Wallpaper]()

Halloween Costume Skeleton Wallpaper

![Initial D Phone Drifting Car Wallpaper]()

Initial D Phone Drifting Car Wallpaper

![Mobile Gravity Falls Wallpaper]()

Mobile Gravity Falls Wallpaper

![Richard Attenborough Holding A Golden Trophy Wallpaper]()

Richard Attenborough Holding A Golden Trophy Wallpaper

![iPhone X OLED Paint Splash Wallpaper]()

iPhone X OLED Paint Splash Wallpaper

![Caption: Shattered Elegance - A Look into the Abyss of Broken Glass Wallpaper]()

Caption: Shattered Elegance - A Look into the Abyss of Broken Glass Wallpaper

![LG G4 Red And Black Hydro Pattern Wallpaper]()

LG G4 Red And Black Hydro Pattern Wallpaper

![Sunset And River Tonal Contrast Wallpaper]()

Sunset And River Tonal Contrast Wallpaper

![Green Butterfly Luminous Flying Up Wallpaper]()

Green Butterfly Luminous Flying Up Wallpaper

![The Iconic Turning Torso Skyscraper in Sweden Wallpaper]()

The Iconic Turning Torso Skyscraper in Sweden Wallpaper

![Guanyu Zhou Pink Sneakers Wallpaper]()

Guanyu Zhou Pink Sneakers Wallpaper

![Borderlands iPhone Black And White Character Wallpaper]()

Borderlands iPhone Black And White Character Wallpaper

![Aesthetic Care Bear Cheer Bear Astronaut Wallpaper]()

Aesthetic Care Bear Cheer Bear Astronaut Wallpaper